NVIDIA HGX H200 Server Options

Blackwell Cloud offers customizable server configurations from various OEMs, built on the NVIDIA HGX™ H200 platform with up to eight H200 GPUs.

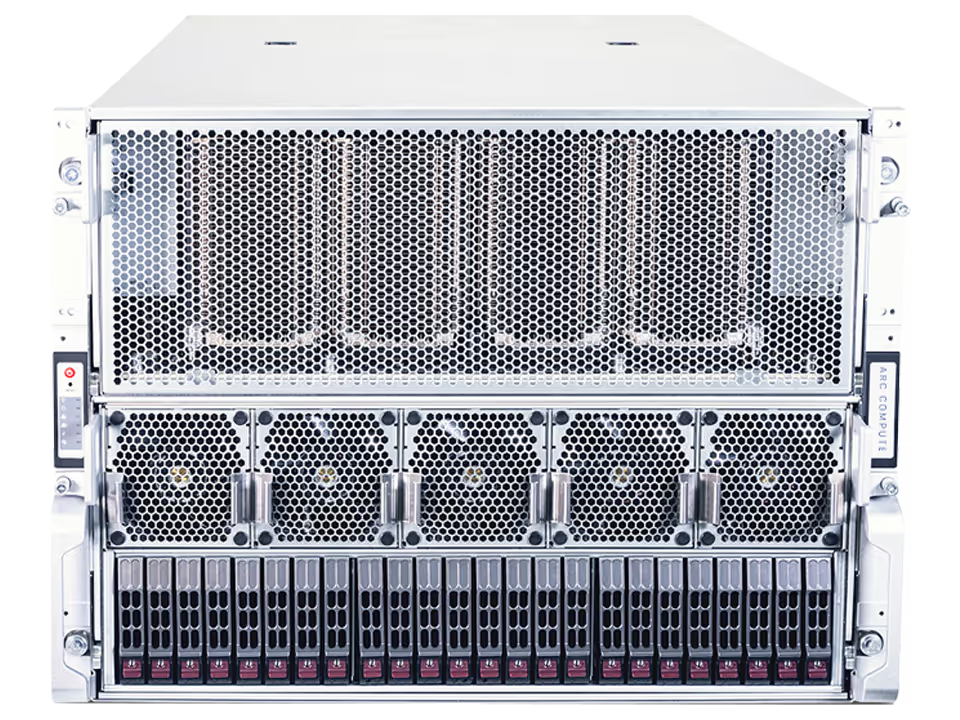

NVIDIA HGX H200 6U

Dell PowerEdge XE9680